AWS Certified Developer - Associate - Exam Simulator

DVA-C02

Unlock your potential as a software developer with the AWS Certified Developer - Associate Exam Simulator! Prepare thoroughly with realistic practice exams designed to mirror the official exam.

Questions update: Jun 06 2024

Questions count: 3787


Domains: 4

Tasks: 13

Services: 55

The AWS Certified Developer - Associate certification is challenging due to its comprehensive coverage of AWS services and its focus on the practical application of development principles within the AWS ecosystem. This certification tests your ability to design, develop, and deploy cloud-based applications on AWS, requiring a solid understanding of core AWS services and best practices for development and architecture.

The certification exam emphasizes an understanding of key AWS services such as EC2, S3, DynamoDB, Lambda, API Gateway, RDS, and others. You need to know how these services work, how to configure them, and how to integrate them into applications.

You have to be able to solve real-world problems, including designing scalable and resilient applications, troubleshooting issues, and optimizing performance and cost. Understanding deployment and monitoring practices is also essential, including familiarity with CI/CD (Continuous Integration and Continuous Deployment) pipelines using AWS services like CodeCommit, CodeBuild, CodeDeploy, and CodePipeline.

Security is another critical aspect. Candidates must know how to implement security best practices, including identity and access management, encryption, and securing data in transit and at rest. They should be able to use IAM roles and policies effectively to secure their applications.

The certification also requires knowledge of AWS's global infrastructure, including regions and availability zones, and how to design applications that take advantage of this infrastructure for high availability and fault tolerance.

Furthermore, the exam demands familiarity with microservices architecture and the ability to implement serverless applications using AWS Lambda and other related services. This involves understanding the benefits and challenges of microservices and how to manage them on AWS.
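To make the serverless point concrete, here is a minimal sketch of a Python Lambda function that could sit behind an API Gateway proxy integration. The function name `lambda_handler`, the `name` query parameter, and the greeting text are illustrative assumptions, not material from the exam or the Simulator.

```python
import json


def lambda_handler(event, context):
    """Return a JSON greeting for an API Gateway proxy-style event.

    Assumption: the event carries an optional "queryStringParameters"
    mapping, which may be missing or None when no query string is sent.
    """
    params = event.get("queryStringParameters") or {}
    name = params.get("name", "world")  # hypothetical parameter

    # Proxy integrations expect a status code and a string body.
    return {
        "statusCode": 200,
        "headers": {"Content-Type": "application/json"},
        "body": json.dumps({"message": f"Hello, {name}!"}),
    }
```

Calling `lambda_handler({"queryStringParameters": {"name": "dev"}}, None)` locally is enough to exercise the function without deploying it.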

The AWS Certified Developer - Associate certification is far more challenging than the AWS Certified Cloud Practitioner because it requires a comprehensive understanding of AWS services and the ability to apply best practices in security, architecture, and deployment. However, it is generally considered easier than the AWS Certified Solutions Architect - Associate because its exam scope is narrower.

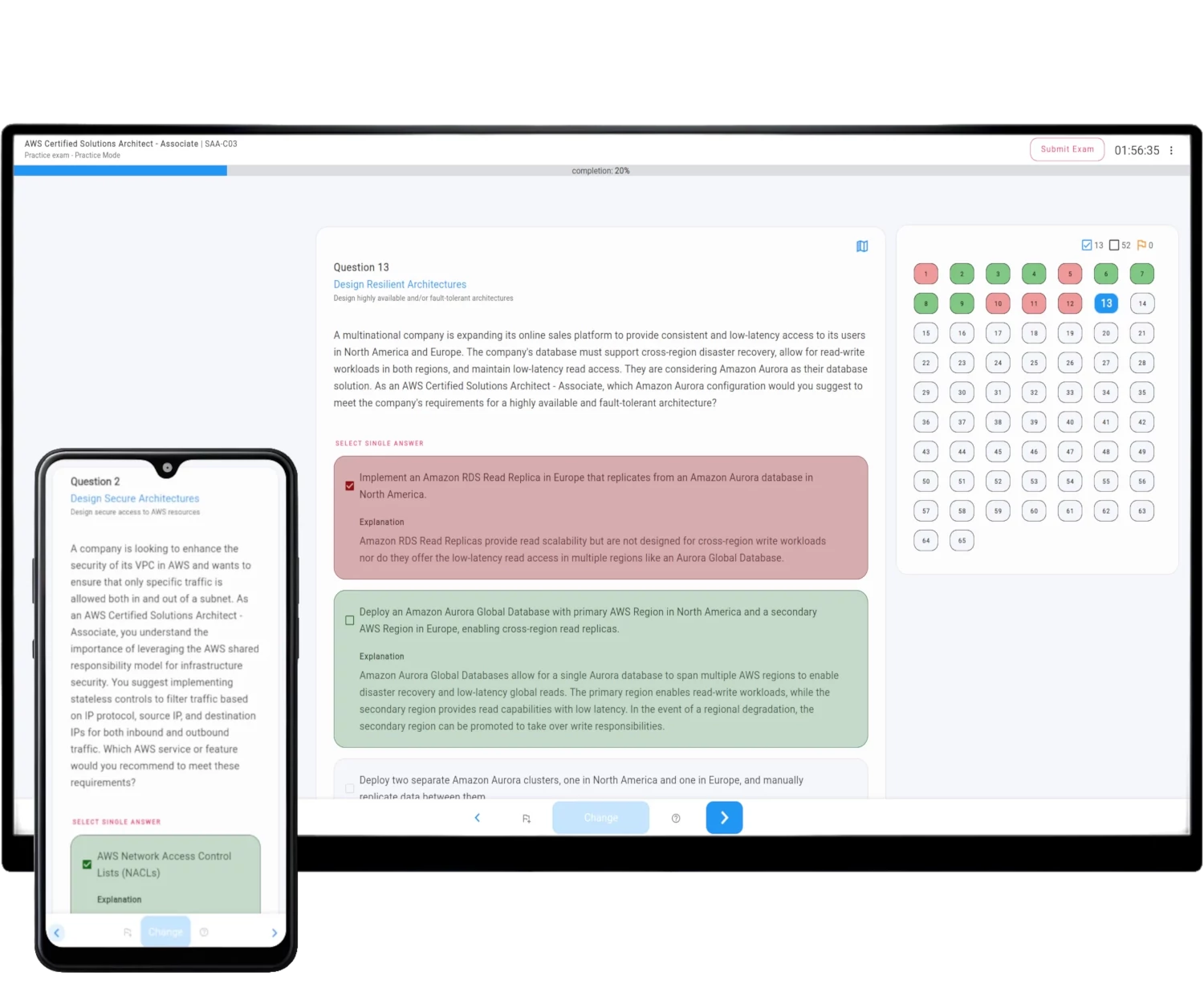

How AWS Exam Simulator works

The Simulator generates unique practice question sets on demand, each fully compatible with the selected official AWS certification exam.

Each set follows the official exam's structure, difficulty level, domains, and tasks.

Rich features not only provide you with the same environment as your real online exam but also help you learn and pass AWS Certified Developer - Associate - DVA-C02 with ease, without lengthy courses and video lectures.

For the full list of features, refer to the detailed description of the AWS Exam Simulator.

| | Exam Mode | Practice Mode |
|---|---|---|
| Question count | 65 | 1 - 75 |
| Limited exam time | Yes | Optional |
| Time limit | 130 minutes | 10 - 200 minutes |
| Exam scope | 4 domains with the appropriate question ratio | Selected domains with the appropriate question ratio |
| Correct answers | After exam submission | After exam submission or after each answered question |
| Question types | Mix of single and multiple correct answers | Single, multiple, or both |
| Question tip | Never | Optional |
| Reveal question domain | After exam submission | After exam submission or during the exam |
| Scoring | 15 of the 65 questions do not count towards the result | Official AWS method or mathematical mean |
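The difference between the two scoring options in the last row can be illustrated with a small sketch. It compares a plain mean over all questions with a percentage computed only over the questions that count. The function names, the sample answers, and the choice of which questions are unscored are all hypothetical, and the sketch does not reproduce the Simulator's implementation or AWS's scaled scoring.

```python
def percent_correct(results):
    """Plain mean: share of all questions answered correctly, in percent."""
    return 100.0 * sum(results) / len(results)


def percent_correct_scored_only(results, unscored_indexes):
    """Percentage over scored questions only, ignoring the unscored ones."""
    scored = [ok for i, ok in enumerate(results) if i not in unscored_indexes]
    return 100.0 * sum(scored) / len(scored)


# Hypothetical exam: 65 answers, the first 45 correct, the last 20 wrong.
results = [True] * 45 + [False] * 20
# Assumption for illustration: the last 15 questions are the unscored ones.
unscored = set(range(50, 65))

mean_score = percent_correct(results)                          # 45 of 65
scored_only = percent_correct_scored_only(results, unscored)   # 45 of 50
print(round(mean_score, 1), round(scored_only, 1))
```

With this sample data the same answers yield roughly 69% under the plain mean and 90% when the unscored questions are excluded, which is why the two options can produce different results.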

Exam Scope

The Practice Exam Simulator question sets are fully compatible with the official exam scope and cover all concepts, services, domains, and tasks specified in the official exam guide.

For the AWS Certified Developer - Associate - DVA-C02 exam, each question belongs to one of 4 domains: Development with AWS Services; Security; Deployment; and Troubleshooting and Optimization. These domains are further divided into 13 tasks.

AWS structures the questions in this way to help learners better understand exam requirements and focus more effectively on domains and tasks they find challenging.

This approach aids in learning and validating preparedness before the actual exam. With the Simulator, you can customize the exam scope by concentrating on specific domains.

Exam Domains and Tasks - example questions

Explore the domains and tasks of the AWS Certified Developer - Associate - DVA-C02 exam, along with a set of example questions.