AWS Certified Solutions Architect - Associate - Exam Simulator

SAA-C03

Unlock your potential with the AWS Certified Solutions Architect - Associate Practice Exam Simulator. This comprehensive tool is designed to prepare you thoroughly and assess your readiness for the most sought-after AWS associate certification.

Questions update: Jun 13 2024

Questions count: 3813


Domains: 4

Tasks: 15

Services: 126

The AWS Certified Solutions Architect - Associate exam's difficulty is significantly influenced by the extensive scope of topics it covers. This wide-ranging content demands a thorough understanding of many AWS services and their integration, which can be daunting, especially for those new to AWS or cloud computing.

The exam covers key areas including compute, storage, databases, networking, security, and application architecture. For compute, you need to understand EC2 instance types, pricing models, and scaling strategies. You must also be familiar with Lambda, whose serverless paradigm calls for a different approach to architecture and deployment than traditional server-based models.

Storage is another critical area, centered on services like S3 and EBS. For S3, this means knowing its storage classes, bucket policies, and lifecycle management. For EBS, it means knowing volume types, performance characteristics, and backup options. Databases on AWS span both relational (RDS) and non-relational (DynamoDB) systems, each with its own configuration, scaling, and maintenance considerations.

Networking on AWS involves VPCs, subnets, route tables, and gateways, which are foundational to designing secure and efficient cloud architectures. You must understand how to set up and manage these components to ensure proper isolation, connectivity, and security. Security itself is a vast topic, covering AWS Identity and Access Management (IAM), encryption, and compliance requirements. Mastery of these elements is crucial, because security misconfigurations can lead to vulnerabilities.

Application architecture requires knowing how to design distributed systems that are highly available, fault-tolerant, and scalable. This involves using load balancers and Auto Scaling groups and designing stateless applications. Understanding the cost implications of architectural decisions is also vital for balancing performance against expenses.

The interrelated nature of these topics adds to the complexity. For instance, designing a fault-tolerant application involves not just compute and storage considerations but also networking and security configurations.

Effective preparation for the exam requires an integrated understanding of how these services work together to build robust, efficient, and secure solutions.

The breadth and depth of knowledge required across these domains, coupled with the need to stay updated with AWS’s frequent service updates and enhancements, contribute significantly to the exam's difficulty.

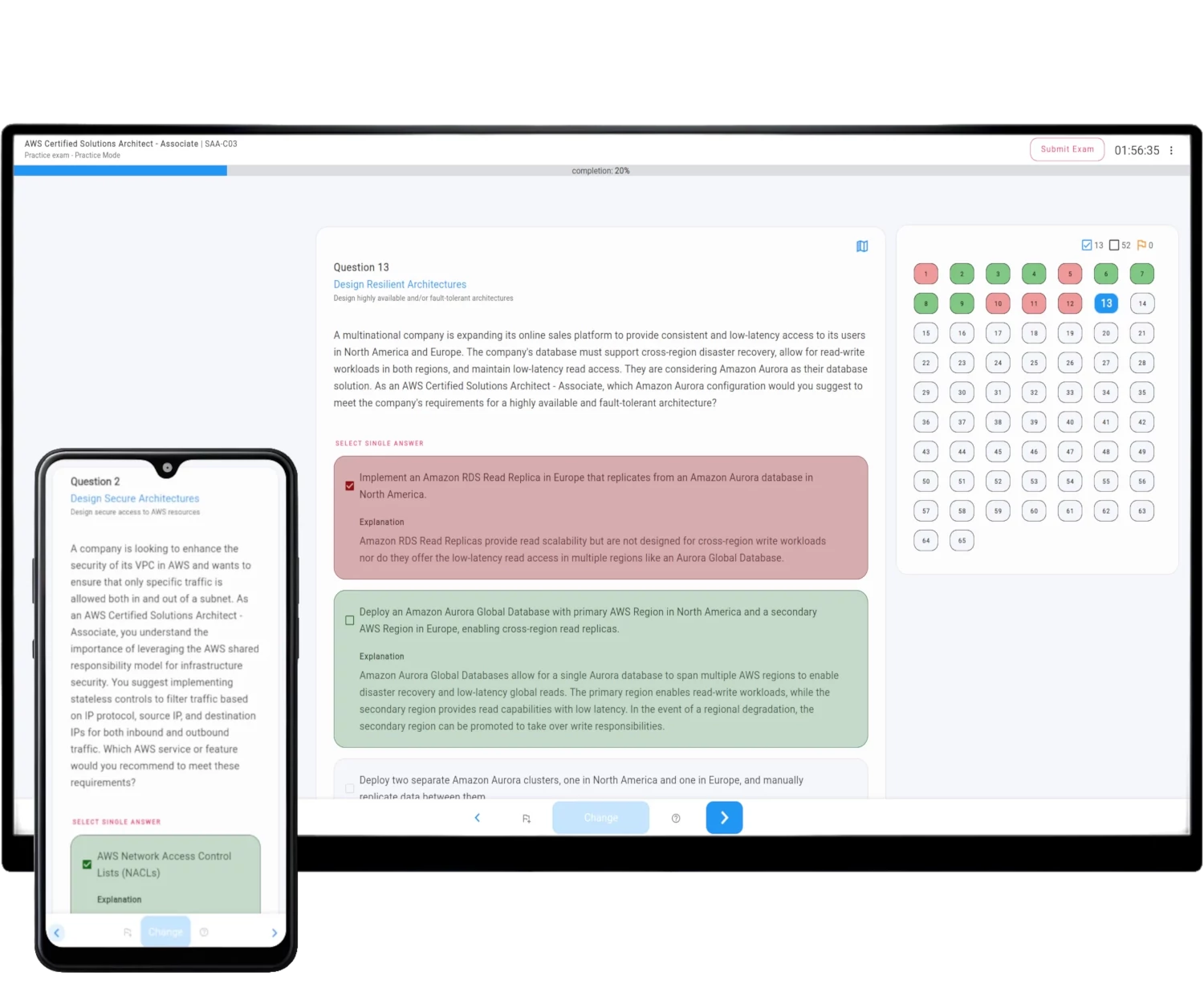

How the AWS Exam Simulator works

The Simulator generates unique practice question sets on demand, fully compatible with the selected official AWS certification exam.

Each set follows the exam's structure, difficulty requirements, domains, and tasks.

Its features not only reproduce the environment of the real online exam but also help you learn for and pass the AWS Certified Solutions Architect - Associate - SAA-C03 exam without lengthy courses or video lectures.

For the full list of features, see the detailed description of the AWS Exam Simulator.

| Feature | Exam Mode | Practice Mode |
|---|---|---|
| Question count | 65 | 1 - 75 |
| Time-limited | Yes | Optional |
| Time limit | 130 minutes | 10 - 200 minutes |
| Exam scope | All 4 domains, with the appropriate question ratio | Selected domains, with the appropriate question ratio |
| Correct answers shown | After exam submission | After exam submission or after each question |
| Question types | Mix of single and multiple correct answers | Single, multiple, or both |
| Question tips | Never | Optional |
| Question domain revealed | After exam submission | After exam submission or during the exam |
| Scoring | 15 of the 65 questions do not count toward the result | Official AWS method or arithmetic mean |
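To make the Exam Mode scoring row concrete: when 15 of the 65 questions are unscored, only 50 count toward the result. The Python sketch below computes a raw percentage over the scored questions only. The `Answer` class, the `raw_percentage` function, and the choice of which 15 questions are unscored are hypothetical illustrations, not the Simulator's actual implementation. The sketch also does not model the scaled score that AWS reports.

```python
from dataclasses import dataclass


@dataclass
class Answer:
    correct: bool  # whether the candidate answered this question correctly
    scored: bool   # False for the unscored questions


def raw_percentage(answers: list[Answer]) -> float:
    """Percentage of correct answers, counting only scored questions."""
    scored = [a for a in answers if a.scored]
    if not scored:
        raise ValueError("no scored questions")
    return 100.0 * sum(a.correct for a in scored) / len(scored)


# 65 questions: the first 50 are treated as scored and the last 15 as unscored.
# This split is hypothetical; the real exam does not reveal which ones are unscored.
answers = [Answer(correct=(i % 5 != 0), scored=(i < 50)) for i in range(65)]

print(sum(a.scored for a in answers))  # prints 50
print(raw_percentage(answers))         # prints 80.0 (40 of 50 scored correct)
```

Answers to unscored questions never affect the result. In this example, changing any of the last 15 `correct` flags leaves the percentage at 80.0.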

Exam Scope

The Practice Exam Simulator's question sets are fully compatible with the official exam scope and cover all concepts, services, domains, and tasks specified in the official exam guide.

For the AWS Certified Solutions Architect - Associate - SAA-C03 exam, each question belongs to one of 4 domains: Design Secure Architectures, Design Resilient Architectures, Design High-Performing Architectures, and Design Cost-Optimized Architectures. These domains are further divided into 15 tasks.

This structure helps learners understand the exam requirements and focus on the domains and tasks they find most challenging.

It supports both learning and checking your readiness before the actual exam. With the Simulator, you can customize the exam scope to concentrate on specific domains.

Exam Domains and Tasks - example questions

Explore the domains and tasks of the AWS Certified Solutions Architect - Associate - SAA-C03 exam, along with a set of example questions.