AWS Certified Solutions Architect - Professional - Exam Simulator

SAP-C02

Elevate your career with the AWS Certified Solutions Architect - Professional Exam Simulator. Get ready to ace the most popular Professional AWS exam with our realistic practice exams. Assess your readiness, boost your confidence, and ensure your success.

Questions update: Jun 06 2024

Questions count: 8203

Domains: 4

Tasks: 20

Services: 154

The AWS Certified Solutions Architect - Professional (SAP-C02) exam is considered one of the most difficult of all AWS certification exams.

The difficulty of the exam stems from several key factors. First, the breadth and depth of knowledge required are substantial. You must have a deep understanding of a wide range of AWS services, including, but not limited to, compute, storage, databases, networking, security, and application services. Additionally, you need to understand how these services integrate to form scalable, reliable, and cost-effective solutions.

Passing the exam requires a deep understanding of AWS best practices for architectural design. This includes knowledge of security, compliance, and governance as it pertains to AWS architectures. You need to be proficient in defining and designing architectures that adhere to AWS’s Well-Architected Framework, ensuring operational excellence, security, reliability, performance efficiency, and cost optimization.

Second, the exam emphasizes real-world scenarios and complex problem-solving. It tests your ability to design multi-tier applications, evaluate and recommend architectures for performance, security, and cost, and automate processes using AWS services. It demands a strong understanding of AWS architecture principles, service capabilities, and the ability to make trade-offs in design choices.

You must demonstrate your ability to design and deploy dynamically scalable, highly available, fault-tolerant, and reliable applications on AWS. This includes selecting appropriate AWS services to design and deploy applications based on specific requirements, migrating complex multi-tier applications to AWS, and implementing cost-control strategies.

Preparation for the exam requires extensive study. Many candidates spend months preparing, using a variety of resources such as AWS whitepapers, online courses, practice exams, and hands-on labs to gain the necessary knowledge and experience. However, doing practice exams with the AWS Exam Simulator and its premium features like repetitions and custom scope is often sufficient to pass this exam.

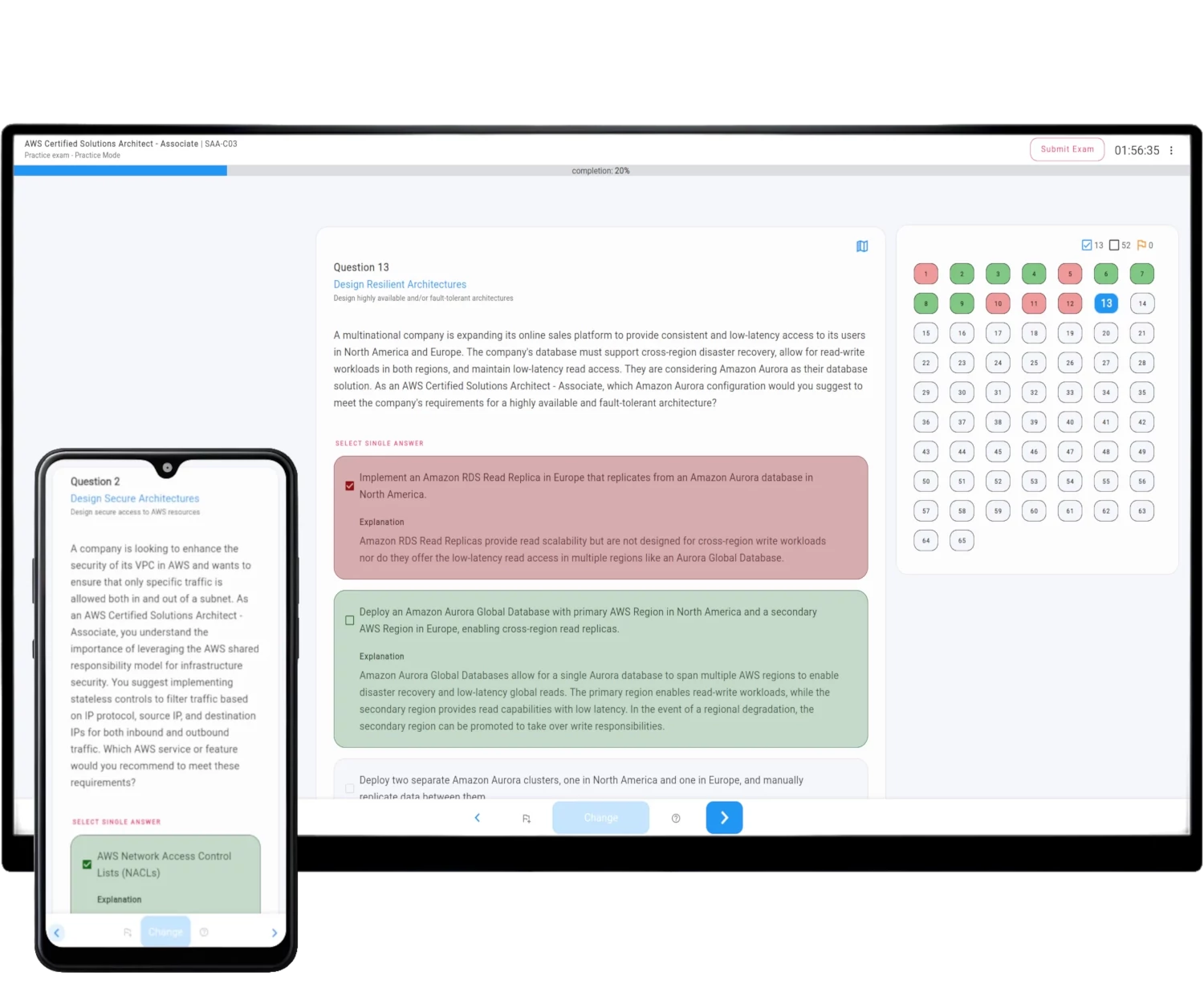

How AWS Exam Simulator works

The Simulator generates unique practice question sets on demand, each matching the selected official AWS certification exam.

The exam structure, difficulty requirements, domains, and tasks are all included.

Rich features not only provide you with the same environment as your real online exam but also help you learn and pass AWS Certified Solutions Architect - Professional - SAP-C02 with ease, without lengthy courses and video lectures.

See all features - refer to the detailed description of AWS Exam Simulator.

| | Exam Mode | Practice Mode |
|---|---|---|
| Question count | 75 | 1 - 75 |
| Limited exam time | Yes | An option |
| Time limit | 180 minutes | 10 - 300 minutes |
| Exam scope | 4 domains with appropriate question ratio | Specify domains with appropriate question ratio |
| Correct answers | After exam submission | After exam submission or after each answered question |
| Question types | Mix of single and multiple correct answers | Single, Multiple or Both |
| Question tip | Never | An option |
| Reveal question domain | After exam submission | After exam submission or during the exam |
| Scoring | 15 of the 75 questions do not count towards the result | Official AWS Method or mathematical mean |
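
To make the Scoring row concrete, here is a minimal sketch of the "mathematical mean" option: the share of correct answers among the questions that count. The function name `mean_score` and the choice of which question positions are unscored are hypothetical and only for illustration; how the Simulator actually picks unscored questions, and how the official scaled score is computed, is not described here.

```python
def mean_score(answers, unscored):
    """Return the percentage of correct answers among scored questions.

    answers  -- list of booleans, True if the question was answered correctly
    unscored -- set of question indexes that do not count towards the result
                (hypothetical; the real selection is not disclosed)
    """
    scored = [ok for i, ok in enumerate(answers) if i not in unscored]
    if not scored:
        raise ValueError("at least one question must be scored")
    return 100.0 * sum(scored) / len(scored)


# Example: 75 questions, the first 15 assumed unscored,
# 45 of the remaining 60 answered correctly.
answers = [True] * 15 + [True] * 45 + [False] * 15
result = mean_score(answers, unscored=set(range(15)))
print(f"{result:.1f}%")
```

Answers to unscored questions have no effect on this figure, so the result depends only on the 60 questions that count.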

Exam Scope

The Practice Exam Simulator question sets are fully compatible with the official exam scope and cover all concepts, services, domains, and tasks specified in the official exam guide.

For the AWS Certified Solutions Architect - Professional - SAP-C02 exam, each question belongs to one of 4 domains: Design Solutions for Organizational Complexity, Design for New Solutions, Continuous Improvement for Existing Solutions, and Accelerate Workload Migration and Modernization. The domains are further divided into 20 tasks.

AWS structures the questions in this way to help learners better understand exam requirements and focus more effectively on domains and tasks they find challenging.

This approach aids in learning and validating preparedness before the actual exam. With the Simulator, you can customize the exam scope by concentrating on specific domains.
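
As an illustration of what an "appropriate question ratio" can mean, the sketch below splits a question count across domains in proportion to their weights, using the largest-remainder method so the counts always add up to the requested total. The percentages in `DOMAIN_WEIGHTS` are assumptions that should be checked against the official exam guide, and `allocate_questions` is a hypothetical helper, not the Simulator's actual algorithm.

```python
# Assumed domain weights in percent; verify against the official exam guide.
DOMAIN_WEIGHTS = {
    "Design Solutions for Organizational Complexity": 26,
    "Design for New Solutions": 29,
    "Continuous Improvement for Existing Solutions": 25,
    "Accelerate Workload Migration and Modernization": 20,
}


def allocate_questions(total, weights):
    """Split `total` questions across domains proportionally to `weights`.

    Uses integer arithmetic and the largest-remainder method: every domain
    gets the floor of its proportional share, and leftover questions go to
    the domains with the largest remainders.
    """
    if total < 0 or not weights or any(w <= 0 for w in weights.values()):
        raise ValueError("total must be >= 0 and all weights positive")
    weight_sum = sum(weights.values())
    counts = {d: total * w // weight_sum for d, w in weights.items()}
    remainders = {d: total * w % weight_sum for d, w in weights.items()}
    leftover = total - sum(counts.values())
    by_remainder = sorted(weights, key=lambda d: remainders[d], reverse=True)
    for domain in by_remainder[:leftover]:
        counts[domain] += 1
    return counts


# Full-scope exam: 75 questions over all 4 domains.
for domain, count in allocate_questions(75, DOMAIN_WEIGHTS).items():
    print(f"{count:2d}  {domain}")

# Custom scope: 20 questions over two selected domains only.
selected = {
    d: DOMAIN_WEIGHTS[d]
    for d in (
        "Design Solutions for Organizational Complexity",
        "Accelerate Workload Migration and Modernization",
    )
}
print(allocate_questions(20, selected))
```

When only some domains are selected, the weights are renormalized over that subset, so the relative ratio between the chosen domains is preserved.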

Exam Domains and Tasks - example questions

Explore the domains and tasks of the AWS Certified Solutions Architect - Professional - SAP-C02 exam, along with example question sets.