AWS Certified DevOps Engineer - Professional - Exam Simulator

DOP-C02

Boost your readiness for the AWS Certified DevOps Engineer - Professional (DOP-C02) exam with our practice exam simulator. Featuring realistic questions and detailed explanations, it helps you identify knowledge gaps and improve your skills.

Questions update: Aug 08 2024

Questions count: 2775

Domains: 6

Tasks: 19

Services: 101

The AWS Certified DevOps Engineer - Professional (DOP-C02) certification is designed for individuals with extensive experience in managing AWS environments. It assesses your ability to implement and manage continuous delivery systems and methodologies on AWS. Before attempting it, you should have a solid understanding of modern development and operations processes and methodologies, experience writing code in at least one high-level programming language, and experience managing automated infrastructure.

The exam consists of 75 questions in multiple-choice and multiple-response formats. You have 180 minutes to complete the exam, which can be taken at a Pearson VUE testing center or online with a proctor. The cost of the exam is $300, and it is available in several languages, including English, Japanese, Korean, and Simplified Chinese. To pass, you need a score of 750 out of 1000.

This certification focuses on six main areas: automating the software development lifecycle (SDLC), managing infrastructure as code (IaC), creating resilient cloud solutions, monitoring and logging, incident and event response, and ensuring security and compliance. Key AWS services you need to be familiar with include AWS CodeCommit, AWS CodeBuild, AWS CodeDeploy, AWS CloudFormation, Amazon CloudWatch, AWS Lambda, and AWS IAM.

Preparing for this certification starts with reviewing the official exam guide, which details the domains covered and their respective weightings. Practical experience is crucial, so setting up a lab environment to practice using AWS services is highly recommended. Training courses, such as those offered by Stephane Maarek and Adrian Cantrill, can provide valuable insights and hands-on experience. Practice exams, like those by Jon Bonso, can help you get accustomed to the exam format and identify the areas that need more attention.

Overall, achieving the AWS Certified DevOps Engineer - Professional certification demonstrates your proficiency in managing and automating AWS environments using DevOps principles, making it a valuable credential for advancing your career in cloud computing and DevOps.

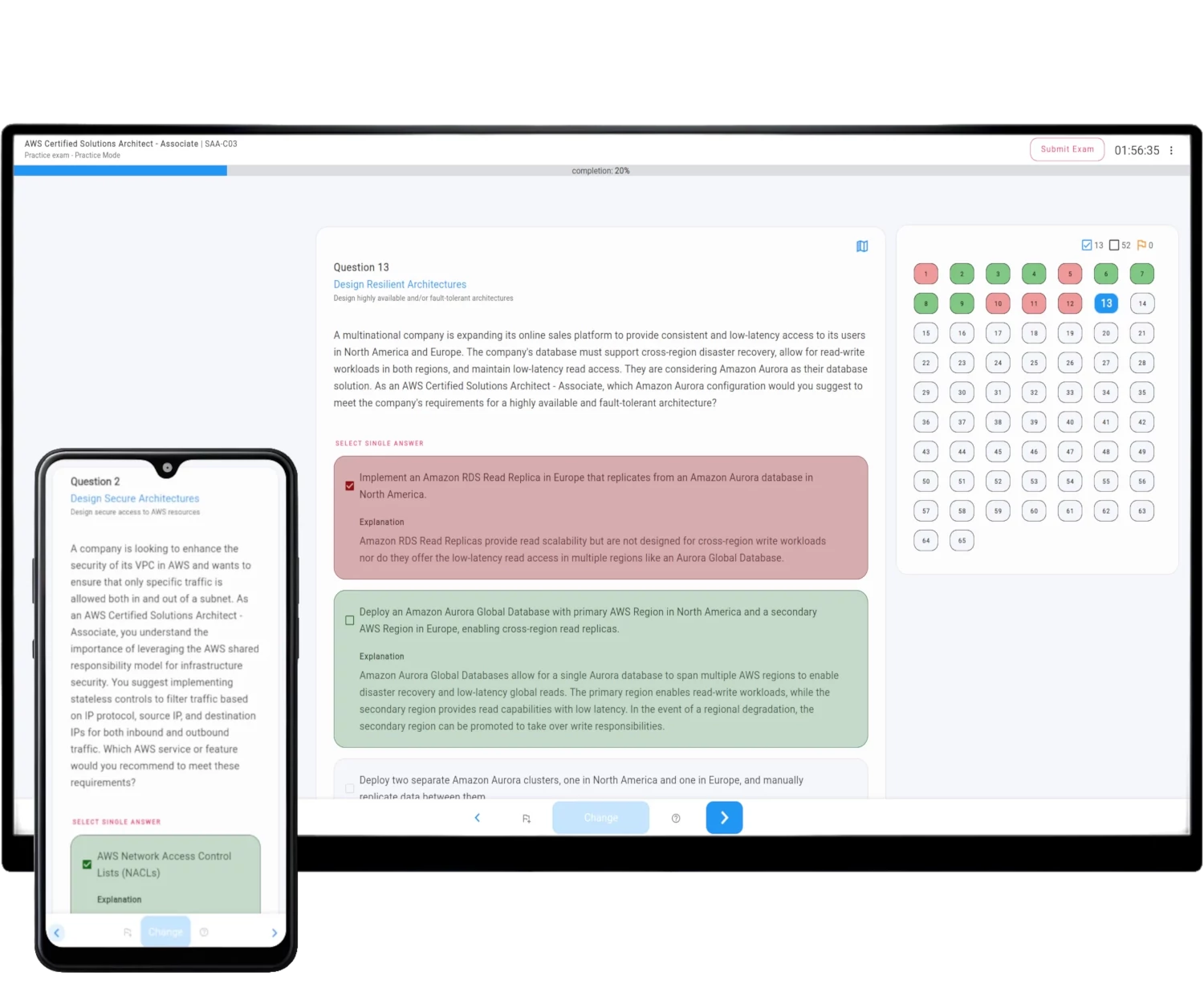

How AWS Exam Simulator works

The Simulator generates unique practice question sets on demand, each one matching the selected official AWS certification exam.

Each generated set follows the official exam's structure, difficulty level, domains, and tasks.

The Simulator reproduces the environment of the real online exam, and its additional features help you learn the material and pass AWS Certified DevOps Engineer - Professional - DOP-C02 without lengthy courses and video lectures.

See all features: refer to the detailed description of AWS Exam Simulator.

| | Exam Mode | Practice Mode |
|---|---|---|
| Question count | 75 | 1 - 75 |
| Limited exam time | Yes | An option |
| Time limit | 180 minutes | 10 - 200 minutes |
| Exam scope | 6 domains with appropriate question ratio | Specify domains with appropriate question ratio |
| Correct answers | After exam submission | After exam submission or after each answered question |
| Question types | Mix of single and multiple correct answers | Single, Multiple or Both |
| Question tip | Never | An option |
| Reveal question domain | After exam submission | After exam submission or during the exam |
| Scoring | 15 of the 75 questions do not count toward the result | Official AWS Method or mathematical mean |
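The Practice Mode limits in the table can be read as a small set of validation rules. The sketch below is illustrative only: `PracticeSettings`, `DOMAINS`, and `EXAM_MODE` are hypothetical names made up for this example, not part of the Simulator, and only the numeric ranges and domain names come from this page.

```python
from dataclasses import dataclass
from typing import Optional, Tuple

# The six DOP-C02 domains as named on this page.
DOMAINS: Tuple[str, ...] = (
    "SDLC Automation",
    "Configuration Management and IaC",
    "Resilient Cloud Solutions",
    "Monitoring and Logging",
    "Incident and Event Response",
    "Security and Compliance",
)


@dataclass(frozen=True)
class PracticeSettings:
    """Hypothetical container for the options listed in the table."""

    question_count: int = 75
    time_limit_minutes: Optional[int] = None  # None means no time limit
    domains: Tuple[str, ...] = DOMAINS

    def __post_init__(self) -> None:
        # Practice Mode allows 1 - 75 questions.
        if not 1 <= self.question_count <= 75:
            raise ValueError("question_count must be between 1 and 75")
        # The time limit is optional; when set it must be 10 - 200 minutes.
        if self.time_limit_minutes is not None and not (
            10 <= self.time_limit_minutes <= 200
        ):
            raise ValueError("time_limit_minutes must be between 10 and 200")
        # At least one domain, and only domains from the exam guide.
        if not self.domains or set(self.domains) - set(DOMAINS):
            raise ValueError("domains must be a non-empty subset of DOMAINS")


# Exam Mode is the fixed case: 75 questions, 180 minutes, all six domains.
EXAM_MODE = PracticeSettings(question_count=75, time_limit_minutes=180)
```

For example, `PracticeSettings(question_count=20, domains=("Monitoring and Logging",))` would describe a short, untimed session focused on a single domain.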

Exam Scope

The Practice Exam Simulator question sets are fully compatible with the official exam scope and cover all concepts, services, domains, and tasks specified in the official exam guide.

For the AWS Certified DevOps Engineer - Professional - DOP-C02 exam, each question belongs to one of 6 domains (SDLC Automation, Configuration Management and IaC, Resilient Cloud Solutions, Monitoring and Logging, Incident and Event Response, and Security and Compliance), which are further divided into 19 tasks.

AWS structures the questions in this way to help learners better understand exam requirements and focus more effectively on domains and tasks they find challenging.

This approach aids in learning and validating preparedness before the actual exam. With the Simulator, you can customize the exam scope by concentrating on specific domains.

Exam Domains and Tasks - example questions

Explore the domains and tasks of the AWS Certified DevOps Engineer - Professional - DOP-C02 exam, along with an example question set.